Could the Surgisphere Retractions Debacle Happen Again?

In May 2020, two major scientific journals published studies that relied on data provided by the now-disgraced data analytics company Surgisphere. Both studies were subsequently retracted.

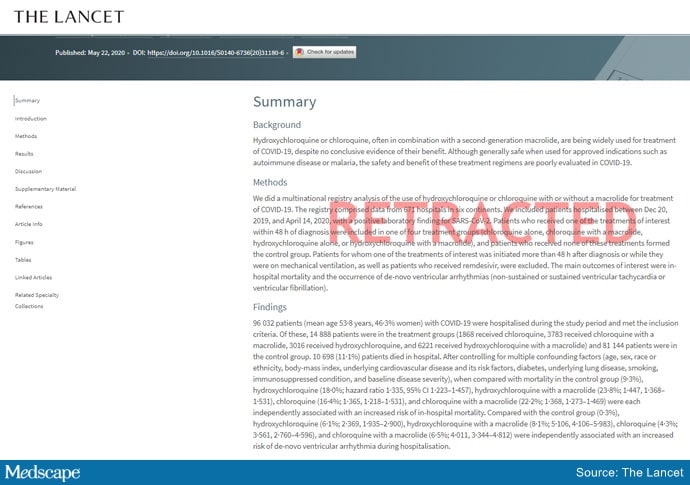

One of the studies, published in The Lancet, reported an association between the antimalarial drugs hydroxychloroquine and chloroquine and increased in-hospital mortality and cardiac arrhythmias in patients with COVID-19. The second study, which appeared in The New England Journal of Medicine, described an association of underlying cardiovascular disease, but not related drug therapy, with increased mortality in COVID-19 patients.

The retractions in June 2020 followed an open letter to each publication penned by scientists, ethicists, and clinicians who flagged serious methodologic and ethical anomalies in the data used in the studies.

On the 1-year anniversary of the retractions, researchers and journal editors talked with Medscape about the lessons learned and how to reduce the risk of something like this happening again.

“The Surgisphere incident served as a wake-up call for everyone involved with scientific research to make sure that data have integrity and are robust,” Sunil Rao, MD, professor of medicine, Duke University Health System, Durham, North Carolina, and editor-in-chief of Circulation: Cardiovascular Interventions, told Medscape Medical News.

“I’m sure this isn’t going to be the last incident of this nature, and we have to be vigilant about new datasets or datasets that we haven’t heard of as having a track record of publication,” Rao said.

Spotlight on Authors

The editors of the Lancet Group responded to the “wake-up call” with a statement, Learning From a Retraction, which announced changes to reduce the risks of research and publication misconduct.

The changes affect multiple phases of the publication process. For example, the declaration form that authors must sign “will require that more than one author has directly accessed and verified the data reported in the manuscript.” Additionally, when a research article is the result of an academic and commercial partnership — as was the case in the two retracted studies — “one of the authors named as having accessed and verified data must be from the academic team.”

This was particularly important because it appears that the academic co-authors of the retracted studies did not have access to the data provided by Surgisphere, a private commercial entity.

Mandeep R. Mehra, MD, William Harvey Distinguished Chair in Advanced Cardiovascular Medicine, Brigham and Women’s Hospital, Boston, Massachusetts, who was the lead author of both studies, declined to be interviewed for this article. In a letter to The New England Journal of Medicine editors requesting that the article be retracted, he wrote: “Because all the authors were not granted access to the raw data and the raw data could not be made available to a third-party auditor, we are unable to validate the primary data sources underlying our article.”

In a similar communication with The Lancet, Mehra wrote even more pointedly that, in light of the refusal of Surgisphere to make the data available to the third-party auditor, “we can no longer vouch for the veracity of the primary data sources.”

“It is very disturbing that the authors were willing to put their names on a paper without ever seeing and verifying the data,” Mario Malički, MD, PhD, a postdoctoral researcher at METRICS Stanford, told Medscape Medical News. “Saying that they could ‘no longer vouch’ suggests that at one point they could vouch for it. Most likely they took its existence and veracity entirely on trust.”

Malički pointed out that one of the four criteria of the International Committee of Medical Journal Editors (ICMJE) for being an author on a study is the “agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.”

Malički called the new policies put forth by The Lancet “encouraging” but suggested that they do not go far enough. “Every author, not only one or two authors, should personally take responsibility for the integrity of data,” he stated.

Many journals “adhere to ICMJE rules in principle and have checkboxes for authors to confirm that they guarantee the veracity of the data.” However, they “do not have the resources to verify the authors’ statements,” he added.

Ideally, “it is the institutions where the researchers work that should guarantee the veracity of the raw data — but I do not know any university or institute that does this,” he said.

No “Good Housekeeping” Seal

For articles based on large, real-world datasets, the Lancet Group will now require that editors ensure that at least one peer reviewer is “knowledgeable about the details of the dataset being reported and can understand its strengths and limitations in relation to the question being addressed.”

For studies that use “very large datasets,” the editors are now required to ensure that, in addition to a statistical peer review, a review from an “expert in data science” is obtained. Reviewers will also be explicitly asked if they have “concerns about research integrity or publication ethics regarding the manuscript they are reviewing.”

Although these changes are encouraging, Harlan Krumholz, MD, professor of medicine (cardiology), Yale University, New Haven, Connecticut, is not convinced that they are realistic.

Krumholz, who is also the founder and director of the Yale New Haven Hospital Center for Outcome Research and Evaluation, told Medscape Medical News that “large, real-world datasets” are of two varieties. Datasets drawn from publicly available sources, such as Medicare or Medicaid health records, are utterly transparent.

By contrast, Surgisphere was a privately owned database, and “it is not unusual for privately owned databases to have proprietary data from multiple sources that the company may choose to keep confidential,” Krumholz said.

He noted that several large datasets are widely used for research purposes, such as those from IBM, Optum, and Komodo, a data analytics company that recently entered into a partnership with a fourth company, PicnicHealth.

These companies receive deidentified electronic health records from health systems and insurers nationwide. Komodo boasts “real-time and longitudinal data on more than 325 million patients, representing more than 65 billion clinical encounters with 15 million new encounters added daily.”

“One has to raise an eyebrow — how were these data acquired? And, given that the US has a population of around 328 million people, is it really plausible that a single company has health records of almost the entire US population?” Krumholz commented. (A spokesperson for Komodo told Medscape Medical News that the company has records on 325 million US patients.)

This is “an issue across the board with ‘real-world evidence,’ which is that it’s like the ‘Wild West’ — the transparencies of private databases are less than optimal and there are no common standards to help us move forward,” Krumholz said, noting that there is “no external authority overseeing, validating, or auditing these databases. In the end, we are trusting the companies.”

Although the US Food and Drug Administration (FDA) has laid out a framework for how real-world data and real-world evidence can be used to advance scientific research, the FDA does not oversee the databases.

“Thus, there is no ‘Good Housekeeping Seal’ that a peer reviewer or author would be in a position to evaluate,” Krumholz said. “No journal can do an audit of these types of private databases, so ultimately, it boils down to trust.”

Nevertheless, there were red flags with Surgisphere, Rao pointed out. Unlike more established and widely used databases, the Surgisphere database had been catapulted from relative obscurity onto center stage, which should have given researchers pause.

AI-Assisted Peer Review

A series of investigative reports by The Guardian raised questions about Sapan Desai, the CEO of Surgisphere, including the fact that hospitals purporting to have contributed data to Surgisphere had never heard of the company.

However, peer reviewers are not expected to be investigative reporters, explained Malički.

“In an ideal world, editors and peer reviewers would have a chance to look at raw data or would have a certificate from the academic institution the authors are affiliated with that the data have been inspected by the institution, but in the real world, of course, this does not happen,” he said.

Artificial intelligence software is being developed and deployed to assist in the peer review process, Malički noted. In July 2020, Frontiers Science News debuted its Artificial Intelligence Review Assistant (AIRA) to help editors, reviewers, and authors evaluate the quality of a manuscript. The program can make up to 20 recommendations, including “the assessment of language quality, the detection of plagiarism, and identification of potential conflicts of interest.” The program is now in use in all 103 journals published by Frontiers. Preliminary software is also available to detect statistical errors.

Another system under development is FAIRware, an initiative of the Research on Research Institute in partnership with the Stanford Center for Biomedical Informatics Research. The partnership’s goal is to “develop an automated online tool (or suite of tools) to help researchers ensure that the datasets they produce are ‘FAIR’ at the point of creation,” said Malički, referring to the findability, accessibility, interoperability, and reusability (FAIR) guiding principles for data management. The principles aim to increase the ability of machines to automatically find and use the data, as well as to support its reuse by individuals.

He added that these advanced tools cannot replace human reviewers, who will “likely always be a necessary quality check in the process.”

Greater Transparency Needed

Another limitation of peer review is the reviewers themselves, according to Malički. “It’s a step in the right direction that The Lancet is now requesting a peer reviewer with expertise in big datasets, but it does not go far enough to increase accountability of peer reviewers,” he said.

Malički is the co–editor-in-chief of the journal Research Integrity and Peer Review, which has “an open and transparent review process, meaning that we reveal the names of the reviewers to the public and we publish the full review report alongside the paper.” The publication also allows the authors to make public the original version they sent.

Malički cited several advantages to transparent peer review, particularly the increased accountability that results from placing potential conflicts of interest under the microscope.

As for the concern that identifying the reviewers might soften the review process, “there is little evidence to substantiate that concern,” he added.

Malički emphasized that making reviews public “is not a problem — people voice strong opinions at conferences and elsewhere. The question remains, who gets to decide if the criticism has been adequately addressed, so that the findings of the study still stand?”

He acknowledged that, “as in politics and on many social platforms, rage, hatred, and personal attacks divert the discussion from the topic at hand, which is why a good moderator is needed.”

A journal editor or a moderator at a scientific conference may be tasked with “stopping all talk not directly related to the topic.”

Widening the Circle of Scrutiny

Malički added, “A published paper should not be considered the ‘final word,’ even if it has gone through peer review and is published in a reputable journal. The peer review process means that a limited number of people have seen the study.”

Once the study is published, “the whole world gets to see it and criticize it, and that widens the circle of scrutiny.”

One classic way to raise concerns about a study after publication is to write a letter to the journal editor. But there is no guarantee that the letter will be published or that the authors will be notified of the feedback.

Malički encourages readers to use PubPeer, an online forum in which members of the public can post comments on scientific studies and articles.

Once a comment is posted, the authors are alerted. “There is no ‘police department’ that forces authors to acknowledge comments or forces journal editors to take action, but at least PubPeer guarantees that readers’ messages will reach the authors and — depending on how many people raise similar issues — the comments can lead to errata or even full retractions,” he said.

PubPeer was key in pointing out errors in a suspect study from France (which did not involve Surgisphere) that supported the use of hydroxychloroquine in COVID-19.

A Message to Policymakers

High stakes are involved in ensuring the integrity of scientific publications. Following publication of the Lancet study, the French government revoked a decree that allowed hospitals to prescribe hydroxychloroquine for certain COVID-19 patients.

After the Surgisphere Lancet article, the World Health Organization temporarily halted enrollment in the hydroxychloroquine component of the Solidarity international randomized trial of medications to treat COVID-19.

Similarly, the UK Medicines and Healthcare Products Regulatory Agency instructed the organizers of COPCOV, an international trial of the use of hydroxychloroquine as prophylaxis against COVID-19, to suspend recruitment of patients. The Solidarity trial briefly resumed, but its hydroxychloroquine arm was ultimately suspended after a preliminary analysis suggested that the drug provided no benefit for patients with COVID-19.

Malički emphasized that governments and organizations should not “blindly trust journal articles” and make policy decisions based exclusively on study findings in published journals — even with the current improvements in the peer review process — without having their own experts conduct a thorough review of the data.

“If you are not willing to do your own due diligence, then at least be brave enough and say transparently why you are making this policy, or any other changes, and clearly state if your decision is based primarily or solely on the fact that ‘X’ study was published in ‘Y’ journal,” he stated.

Rao believes that the most important take-home message of the Surgisphere scandal is “that we should be skeptical and do our own due diligence about the kinds of data published — a responsibility that applies to all of us, whether we are investigators, editors at journals, the press, scientists, and readers.”

Rao reports being on the steering committee of the NHLBI-sponsored MINT trial and the Bayer-sponsored PACIFIC AMI trial. Malički reports being a postdoc at METRICS, Stanford in the past 3 years. Krumholz received expenses and/or personal fees from UnitedHealth, Element Science, Aetna, Facebook, the Siegfried and Jensen Law Firm, Arnold and Porter Law Firm, Martin/Baughman Law Firm, F-Prime, and the National Center for Cardiovascular Diseases in Beijing. He is an owner of Refactor Health and HugoHealth and had grants and/or contracts from the Centers for Medicare & Medicaid Services, the FDA, Johnson & Johnson, and the Shenzhen Center for Health Information.
