Real-time molecular imaging of near-surface tissue using Raman spectroscopy

Modern imaging modalities have driven steady progress in medicine and the treatment of disease. Among them, Raman spectroscopy has gained attention for clinical applications as a label-free, non-invasive method that delivers a molecular fingerprint of a sample. Researchers can combine the technique with fiber-optic probes to gain easy access to a patient’s body. However, acquiring images with fiber-optic probes remains challenging. In a new report published in Nature Light: Science & Applications, Wei Yang and a team of scientists at the Leibniz Institute of Photonic Technology in Germany developed a fiber-optic probe-based Raman imaging system that visualizes molecular virtual reality data in real time and detects chemical boundaries.

The researchers built the process around a computer-vision-based positional tracking system, combined with photometric stereo and augmented and mixed reality, for molecular imaging and direct visualization of molecular boundaries on three-dimensional surfaces. The approach can image large tissue areas in a few minutes and distinguish clinical tissue boundaries in a range of biological samples.

Imaging modalities and designing molecular virtual reality images in biomedicine

Physicians typically use magnetic resonance imaging, computed tomography, positron emission tomography and ultrasound to screen patients for disease diagnosis and evaluation. These methods can be improved for precise, guided surgery and for continuous, non-invasive monitoring of patients. Current imaging techniques are primarily based on the anatomy and morphology of tissue, without considering the underlying tissue composition. Researchers have therefore emphasized Raman-based methods for clinical in vivo applications. Raman spectroscopy relies on inelastic scattering between a photon and a molecule to provide an intrinsic molecular fingerprint of a sample. The information is gathered in a label-free, contactless and non-destructive manner and can be used to detect and delineate cancer from healthy tissue. Scientists have also explored the potential of the method for data visualization and comprehension.

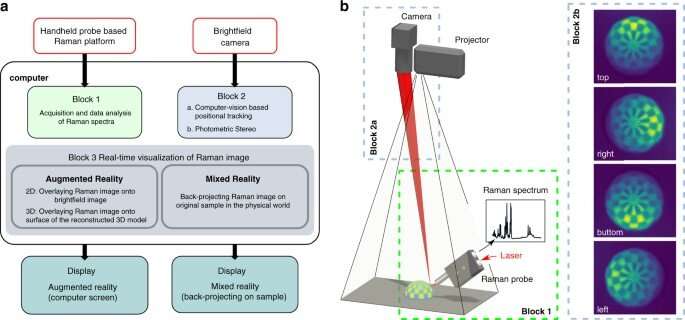

In this work, Yang et al. proposed and developed a fiber-based Raman imaging method with real-time data analysis, combined with augmented reality and mixed reality, on three-dimensional (3D) sample surfaces. They present it as a potential route to real-time, opto-molecular visualization of tissue boundaries for disease diagnostics and surgery. The team combined Raman spectra measurements with computer-vision-based positional tracking and real-time data processing to generate molecular virtual reality images. The work lays a foundation for future clinical translation of real-time Raman-based molecular imaging with easy access to patients.
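The combination of tracked probe positions and per-spectrum analysis can be pictured as a simple painting loop. The sketch below is a minimal illustration, not the authors' implementation: `Measurement`, `paint_measurements`, the pixel units and the integer class labels are all hypothetical, and it assumes each spectrum has already been assigned a class by some classifier.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    x: float    # tracked probe position in image pixels (hypothetical units)
    y: float
    label: int  # class index assigned to the spectrum (assumed given)


def paint_measurements(width, height, measurements, spot_radius):
    """Return a height x width grid of labels; 0 means 'not yet scanned'."""
    grid = [[0] * width for _ in range(height)]
    r2 = spot_radius * spot_radius
    for m in measurements:
        # Restrict the search to the bounding box of the laser spot.
        x0 = max(0, int(m.x - spot_radius))
        x1 = min(width - 1, int(m.x + spot_radius))
        y0 = max(0, int(m.y - spot_radius))
        y1 = min(height - 1, int(m.y + spot_radius))
        for py in range(y0, y1 + 1):
            for px in range(x0, x1 + 1):
                # Paint every pixel that lies inside the circular spot.
                if (px - m.x) ** 2 + (py - m.y) ** 2 <= r2:
                    grid[py][px] = m.label  # newer measurements overwrite older ones
    return grid


if __name__ == "__main__":
    scan = [Measurement(2, 2, 1), Measurement(4, 2, 2)]
    for row in paint_measurements(7, 5, scan, spot_radius=1):
        print(row)
```

Each new measurement updates only the pixels under the laser spot, so the map grows as the probe moves. A boundary appears wherever neighboring painted pixels carry different labels.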

Imaging of a 3D structured bio-sample surface and ex-vivo tumor tissue

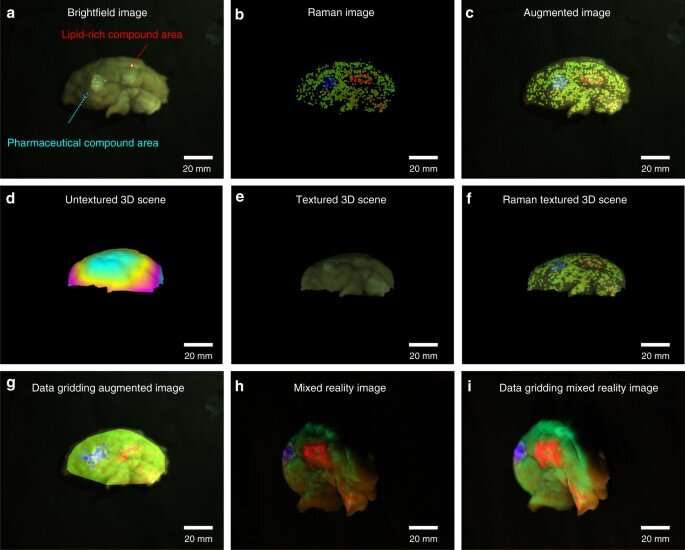

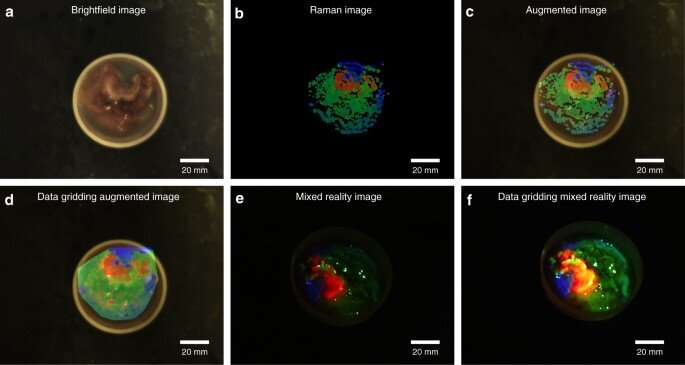

Yang et al. applied the proposed approach to porcine cerebrum and sarcoma tissue to differentiate molecularly distinct regions. They coated the cerebrum with a lipid-rich pharmaceutical compound and scanned the brain surface to capture both topology and molecular information, then mapped the molecular data onto the reconstructed 3D model. The combined data provided a realistic view of the chemical distribution on the surface. Similarly, Yang et al. excised a tumor sample and conducted laser-based acquisition to show molecular boundaries within the pathological tissue, with a view to image-guided in vivo disease diagnostics and surgical resection.

Data flow

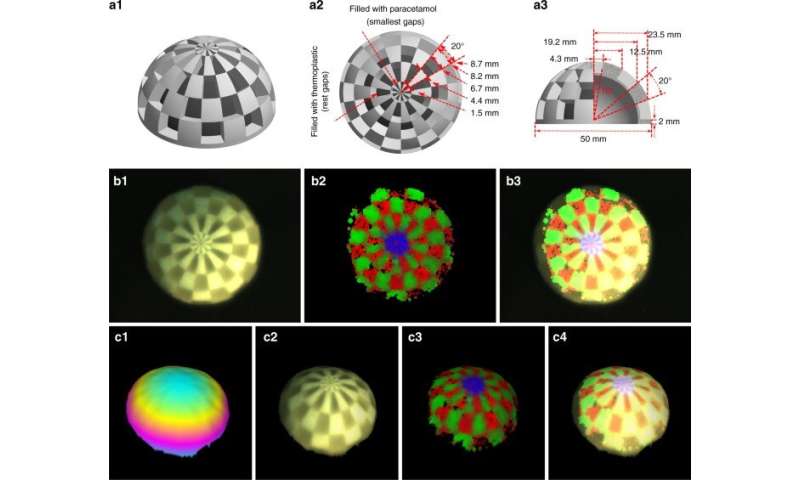

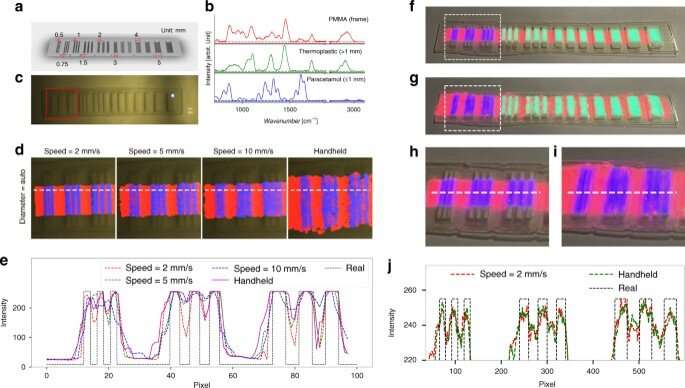

The team visualized the molecular information using augmented reality (AR) and mixed reality (MR), alongside topographic reconstruction and real-time data analysis. In AR, the scientists mapped the molecular information onto the bright-field image or a 3D surface model. In MR, they projected the molecular information onto the sample itself and registered the projected image in several steps. To avoid disturbing the laser tracking, the team reduced the intensity of the projected image by adjusting its transparency. The scientists next characterized the instrumentation for 3D reconstruction and showed that the experimental distortions agreed with theoretical simulations. They minimized the distortion by increasing the ratio between the height of the camera (or projector) and the thickness of the sample.
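One simple way to see why a larger ratio of camera height to sample thickness reduces distortion is a pinhole camera looking straight down. This is an illustrative assumption, not the simulation used in the paper, and `apparent_shift` and the example values are hypothetical. By similar triangles, a point at lateral offset x and height h above the base plane appears on that plane at x·H/(H − h) for a camera at height H, so the apparent shift is x·h/(H − h).

```python
def apparent_shift(offset, sample_height, camera_height):
    """Lateral shift of a raised point when projected onto the base plane.

    Assumes an ideal pinhole camera on the optical axis at `camera_height`
    above the base plane; all lengths share the same unit.
    """
    if not 0 <= sample_height < camera_height:
        raise ValueError("sample_height must be in [0, camera_height)")
    return offset * sample_height / (camera_height - sample_height)


if __name__ == "__main__":
    # Hypothetical values: a point 10 mm off-axis on a 10 mm thick sample.
    for camera_height in (110, 210, 510):
        shift = apparent_shift(10, 10, camera_height)
        print(f"camera at {camera_height} mm: shift = {shift:.2f} mm")
```

Under this model, raising the camera or projector relative to the sample thickness shrinks the shift toward zero, which is consistent with the trend described above.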

Spatial resolution

In theory, the spatial resolution of the system is determined by the spatial resolution of the bright-field camera and the size of the laser spot. In this work, only the resolution of the bright-field camera and the motion speed of the probe limited the resolution of the system. For instance, the team compared augmented reality visualizations and reconstructed Raman images against the known distribution and spacing of molecular compounds. The resulting images and plots highlighted a reconstruction strategy that balances the speed of probe movement against the quality of the reconstructed Raman spectra, achieving a spatial resolution of approximately 0.5 mm. The results showed that mixed reality visualization has similar positional accuracy and spatial resolution to augmented reality. Yang et al. compared the achievable spatial resolution with that of conventional medical imaging methods, including CT and MRI, as image-guided processes for clinical applications. The team could further improve spatial resolution with a higher-resolution bright-field camera and a smaller laser spot, while still covering a large area with suitable image quality.
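The trade-off between probe speed and resolution can be illustrated with a back-of-the-envelope estimate. This is a rough sketch under stated assumptions, not the authors' analysis: a circular spot of diameter d moving at constant speed v during an acquisition of duration t sweeps a length of d + v·t along the scan direction. The function names, the spot diameter and the integration time below are hypothetical; only the 0.5 mm target comes from the article.

```python
def swept_length_mm(spot_diameter_mm, speed_mm_s, integration_s):
    """Length covered along the scan direction during one acquisition."""
    return spot_diameter_mm + speed_mm_s * integration_s


def max_speed_mm_s(target_mm, spot_diameter_mm, integration_s):
    """Fastest probe speed that keeps the swept length within target_mm."""
    if target_mm < spot_diameter_mm:
        raise ValueError("target is smaller than the laser spot itself")
    return (target_mm - spot_diameter_mm) / integration_s


if __name__ == "__main__":
    spot = 0.1         # mm, hypothetical laser spot diameter
    integration = 0.1  # s, hypothetical acquisition time per spectrum
    speed = max_speed_mm_s(0.5, spot, integration)
    print(f"max speed for a 0.5 mm footprint: {speed:.1f} mm/s")
    print(f"footprint at that speed: {swept_length_mm(spot, speed, integration):.2f} mm")
```

Shortening the acquisition allows faster scanning at the same footprint but collects fewer photons per spectrum, which is the balance between speed and spectral quality noted above.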

Outlook: Clinical translation of virtual reality-based Raman spectroscopy
