Artificial intelligence can analyze myoclonus severity from video footage

Fast, reliable and automatic assessment of the severity of myoclonic jerks from video footage is now possible, thanks to an algorithm that uses a deep convolutional neural network architecture and pretrained models that identify and track keypoints in the human body. Published in Seizure, the study is a joint effort by the Epilepsy Centre at Kuopio University Hospital, the University of Eastern Finland and Neuro Event Labs.

Myoclonus refers to brief, involuntary twitching of muscles, and it is the most disabling and progressive drug-resistant symptom in patients with progressive myoclonus epilepsy type 1 (EPM1). It is stimulus-sensitive, and its severity fluctuates during the day. In addition, stress, sleep deprivation and anxiety can significantly aggravate myoclonic symptoms. Objective clinical follow-up of myoclonus is challenging and requires extensive expertise from the treating physician. Physicians and the medical industry have therefore been seeking automatic tools that make serial myoclonus evaluations more consistent and reliable, so that treatment effect and disease progression can be estimated with confidence.

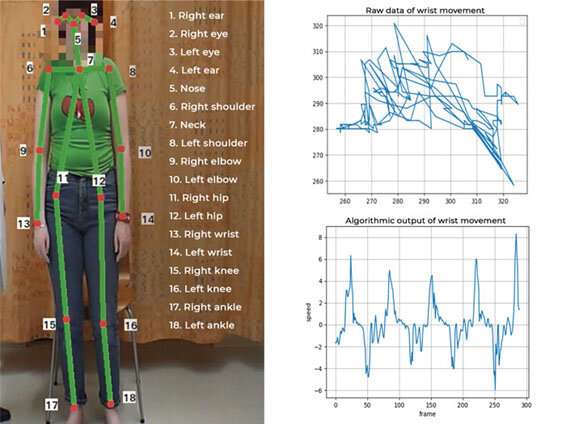

The Unified Myoclonus Rating Scale (UMRS), a clinical videorecorded test panel, is the gold standard currently used to evaluate myoclonus. The researchers analysed 10 videorecorded UMRS test panels using automatic pose estimation and keypoint detection methods. The automatic methods succeeded in detecting and tracking predefined keypoints in the human body during movement. The researchers also analysed speed changes and the smoothness of movement to detect and quantify myoclonic jerks. The scores obtained using automatic myoclonus detection correlated well with the clinical UMRS scores for myoclonus with action and for functional tests, as evaluated by an experienced clinical researcher.
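
To make the idea of analysing speed changes and smoothness more concrete, the following is a minimal Python sketch. It is not the study's algorithm, which the article does not describe in detail. It assumes a single keypoint (for example a wrist) has already been tracked as a list of pixel coordinates, one per video frame. The function names, the threshold rule and the parameters `k` and `min_change` are all hypothetical choices made for illustration.

```python
import math
import statistics


def speeds(track, fps):
    """Frame-to-frame speed (pixels per second) of one keypoint track.

    `track` is a list of (x, y) pixel positions, one per frame (assumed input).
    """
    return [
        math.hypot(x1 - x0, y1 - y0) * fps
        for (x0, y0), (x1, y1) in zip(track, track[1:])
    ]


def speed_changes(track, fps):
    """Absolute change in speed between consecutive frame transitions."""
    v = speeds(track, fps)
    return [abs(b - a) for a, b in zip(v, v[1:])]


def detect_jerks(track, fps, k=5.0, min_change=100.0):
    """Return frame indices where the speed changes abruptly.

    Hypothetical rule: a frame is flagged when the speed change around it
    exceeds both `min_change` (pixels per second) and `k` times the median
    speed change of the whole track.
    """
    dv = speed_changes(track, fps)
    if not dv:
        return []
    threshold = max(k * statistics.median(dv), min_change)
    # dv[i] compares the transitions before and after frame i + 1.
    return [i + 1 for i, d in enumerate(dv) if d > threshold]


def roughness(track, fps):
    """Mean absolute speed change; 0.0 for perfectly uniform motion."""
    dv = speed_changes(track, fps)
    return sum(dv) / len(dv) if dv else 0.0


# Synthetic example: uniform motion, and the same motion with one sudden
# 15-pixel displacement at frame 10.
smooth_track = [(float(i), 0.0) for i in range(20)]
jerky_track = [(float(i), 15.0 if i == 10 else 0.0) for i in range(20)]

smooth_jerks = detect_jerks(smooth_track, fps=30)
jerky_jerks = detect_jerks(jerky_track, fps=30)
```

On this synthetic data the uniform track produces no flagged frames, while the displaced track is flagged at the frames where the speed rises and falls around the sudden movement. A real pipeline would need to handle tracking noise, missing keypoints and camera motion, none of which this sketch addresses.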
